Exploratory Data Analysis

Overview

Exploratory Data Analysis (EDA) is a critical first step in any data mining project. For frequent itemset mining, EDA helps us understand the dataset characteristics, identify preprocessing requirements, and make informed decisions about algorithm parameters such as minimum support thresholds.

Data Inspection

Dataset Overview

The experiments use the Amazon Reviews 2023 dataset from Hugging Face, which contains product reviews across multiple categories:

- Source: Hugging Face Datasets - McAuley-Lab/Amazon-Reviews-2023

- Categories Used: Appliances, Digital Music, Health and Personal Care, Handmade Products, All Beauty

- Total Records: Over 4 million review records across all categories

- Data Format: JSONL (JSON Lines) format with one review per line
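Because each line of a JSONL file is an independent JSON object, records can be parsed one at a time. The following is a minimal sketch using only the standard library; the two sample lines and the helper name `read_jsonl` are made up for illustration and are not taken from the dataset or the project code.

```python
import io
import json

# Two made-up review records in JSONL form (illustrative values only).
sample_jsonl = io.StringIO(
    '{"user_id": "U1", "asin": "A100", "parent_asin": "P10", "verified_purchase": true}\n'
    '{"user_id": "U2", "asin": "A200", "parent_asin": null, "verified_purchase": false}\n'
)


def read_jsonl(handle):
    """Yield one dict per non-empty line of a JSONL stream."""
    for line in handle:
        line = line.strip()
        if line:
            yield json.loads(line)


records = list(read_jsonl(sample_jsonl))
print(len(records), records[1]["parent_asin"])
```

JSON `null` becomes Python `None`, which is why nullable fields such as `parent_asin` need explicit handling later on.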

Dataset Features

The dataset contains the following key features (variables):

Categorical Variables

- `user_id` (string): Unique identifier for each user/reviewer

- `asin` (string): Amazon Standard Identification Number, the unique product identifier

- `parent_asin` (string, nullable): Parent product identifier for product variants

- `category` (string): Product category (when combining multiple categories)

- `verified_purchase` (boolean): Whether the purchase was verified

Numerical Variables

- `overall` (float): Rating score (typically 1-5)

- `helpful` (list): Helpful vote counts

- `unix_review_time` (integer): Unix timestamp of review

Text Variables

- `review_text` (string): Review content

- `summary` (string): Review summary

Basic Statistics

The EDA process reveals key statistics about the dataset:

Record-Level Statistics

- Total Records: 4,118,850 reviews (combined categories)

- Verified Purchases: Percentage varies by category, typically 60-80% of reviews

- Unique Users: Hundreds of thousands of unique reviewers

- Unique Products (ASIN): Tens of thousands of individual products

- Unique Product Groups (Parent ASIN): Fewer than individual ASINs, enabling product grouping

Transaction-Level Statistics

After converting reviews to transactions (grouping by user):

- Total Transactions: Varies based on preprocessing parameters

- Average Transaction Size: Typically 2-3 items per user

- Transaction Size Range: From 1 item to dozens of items

- Unique Items: Thousands to tens of thousands depending on category and grouping strategy
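The statistics above can be derived once reviews are grouped by user. The sketch below shows one way to do that on made-up `(user_id, item_id)` pairs; `build_transactions` and `transaction_stats` are hypothetical helpers written for this example and are not the project's `create_user_carts()` or `get_transaction_stats()`.

```python
from collections import defaultdict

# Made-up (user_id, item_id) pairs standing in for review records.
reviews = [
    ("U1", "P10"), ("U1", "P11"), ("U1", "P10"),  # U1 reviewed P10 twice
    ("U2", "P10"),
    ("U3", "P11"), ("U3", "P12"), ("U3", "P13"),
]


def build_transactions(pairs):
    """Group items by user; using a set drops repeated items per user."""
    carts = defaultdict(set)
    for user_id, item_id in pairs:
        carts[user_id].add(item_id)
    return dict(carts)


def transaction_stats(carts):
    """Compute transaction count, size summary, and unique item count."""
    sizes = [len(items) for items in carts.values()]
    unique_items = set().union(*carts.values()) if carts else set()
    return {
        "num_transactions": len(sizes),
        "avg_transaction_size": sum(sizes) / len(sizes) if sizes else 0.0,
        "min_size": min(sizes, default=0),
        "max_size": max(sizes, default=0),
        "num_unique_items": len(unique_items),
    }


carts = build_transactions(reviews)
stats = transaction_stats(carts)
print(stats)
```

Treating each user's cart as a set is an assumption made here: a user who reviews the same item twice contributes it only once to the transaction.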

Missing Values

The dataset contains several types of missing values:

- `parent_asin`: Many products don’t have a parent ASIN (null values)
  - Handling: Fallback to `asin` when `parent_asin` is null
  - Impact: Affects product grouping strategy

- `verified_purchase`: Some records may have null values
  - Handling: Filtered to only include verified purchases (`verified_purchase == True`)
  - Rationale: Ensures data quality and reduces noise from unverified reviews

- `user_id`: Rarely missing, but critical for transaction creation
  - Handling: Records with missing `user_id` are excluded from transaction creation
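The three handling rules can be sketched on plain dictionaries as follows. The sample records and the `clean` helper are made up for illustration. The sketch assumes that a null `verified_purchase` is dropped along with `False`, which is consistent with a `verified_purchase == True` filter but is not confirmed by the project code.

```python
# Made-up records covering each missing-value case.
records = [
    {"user_id": "U1", "asin": "A1", "parent_asin": "P1", "verified_purchase": True},
    {"user_id": "U1", "asin": "A2", "parent_asin": None, "verified_purchase": True},
    {"user_id": "U2", "asin": "A3", "parent_asin": "P3", "verified_purchase": None},
    {"user_id": None, "asin": "A4", "parent_asin": "P4", "verified_purchase": True},
    {"user_id": "U3", "asin": "A5", "parent_asin": "P5", "verified_purchase": False},
]


def clean(records):
    """Apply the three handling rules and return (user_id, item_id) pairs."""
    pairs = []
    for rec in records:
        # Keep verified purchases only; None and False are both dropped.
        if rec.get("verified_purchase") is not True:
            continue
        # Records without a user cannot be assigned to a transaction.
        if not rec.get("user_id"):
            continue
        # Fall back to asin when parent_asin is null.
        item_id = rec.get("parent_asin") or rec["asin"]
        pairs.append((rec["user_id"], item_id))
    return pairs


pairs = clean(records)
print(pairs)
```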

Visualizations

The EDA process generates comprehensive visualizations to understand data patterns:

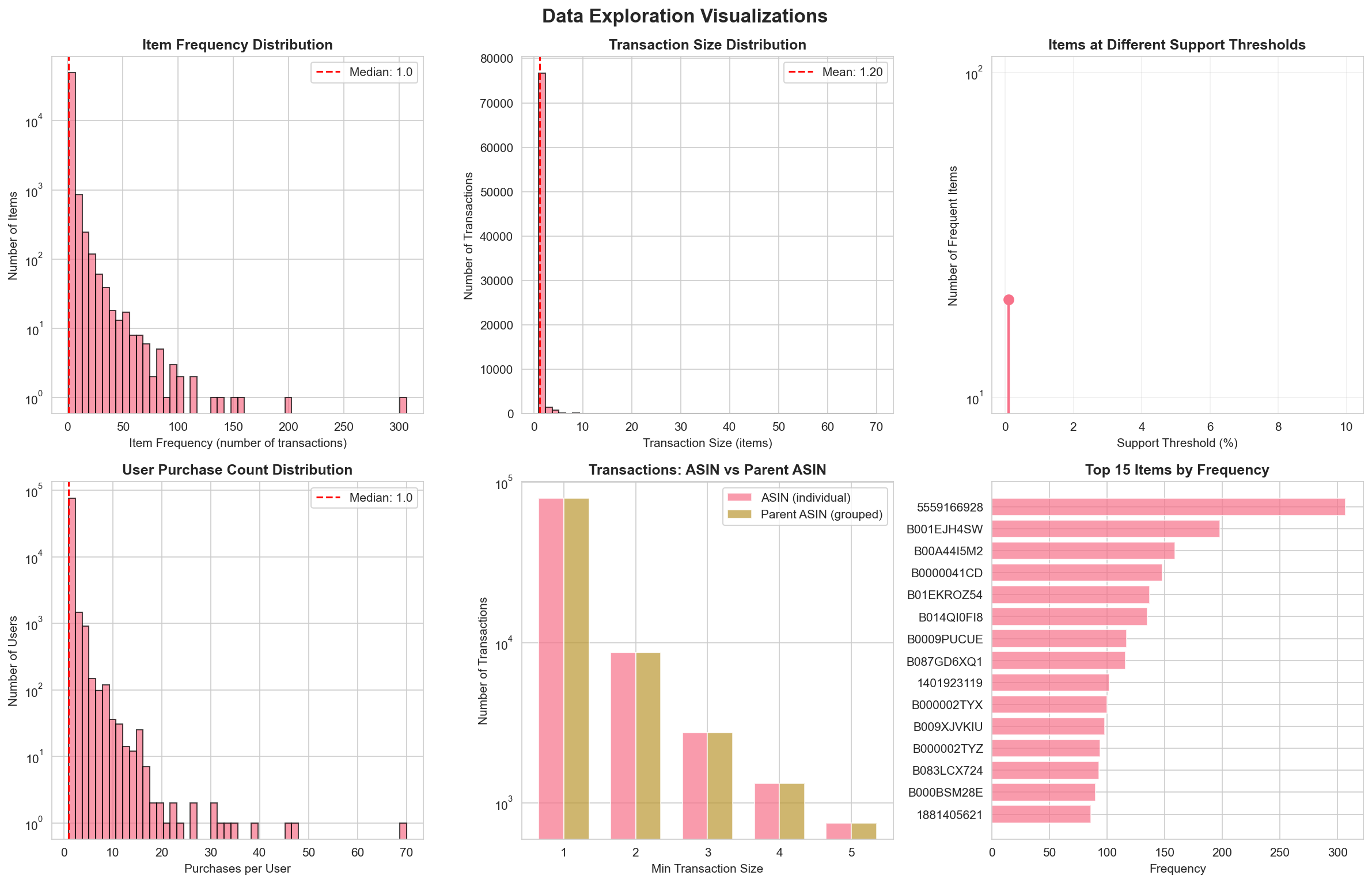

Comprehensive data exploration visualization showing dataset statistics, user/product patterns, transaction configurations, item frequency analysis, and transaction size distributions.

Key Visualizations

1. Item Frequency Distribution

- Purpose: Understand how items are distributed across transactions

- Insights:

- Most items appear infrequently (long tail distribution)

- Few items appear in many transactions (power law distribution)

- Helps determine appropriate minimum support thresholds
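The quantity behind this plot is each item's support, that is, the fraction of transactions containing it. A minimal sketch on made-up transactions follows; `item_support` is a hypothetical helper and not the project's `calculate_item_frequencies()`.

```python
from collections import Counter

# Made-up transactions (lists of item IDs).
transactions = [
    ["P10", "P11"],
    ["P10"],
    ["P10", "P12", "P13"],
    ["P11", "P10"],
]


def item_support(transactions):
    """Map each item to the fraction of transactions that contain it."""
    counts = Counter()
    for items in transactions:
        counts.update(set(items))  # count each item once per transaction
    n = len(transactions)
    return {item: count / n for item, count in counts.items()}


support = item_support(transactions)
# Print items from most to least frequent.
for item, s in sorted(support.items(), key=lambda kv: (-kv[1], kv[0])):
    print(f"{item}: {s:.2f}")
```

Sorting the support values in descending order and plotting them against rank is one common way to make a long tail visible.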

2. Transaction Size Distribution

- Purpose: Understand the distribution of items per transaction

- Insights:

- Most transactions contain 1-5 items

- Average transaction size guides preprocessing decisions

- Helps set the `min_transaction_size` parameter

3. Items at Different Support Thresholds

- Purpose: Determine how many frequent itemsets would be found at various support levels

- Insights:

- Shows exponential decay as support threshold increases

- Helps select appropriate minimum support for algorithm execution

- Critical for balancing computational cost vs. result completeness
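A threshold sweep of this kind can be sketched as below. For simplicity the sketch counts only single items (1-itemsets) that meet each threshold; the helper name `items_at_thresholds` and the toy data are made up for this example.

```python
from collections import Counter


def items_at_thresholds(transactions, thresholds):
    """Return {threshold: number of items whose support >= threshold}."""
    counts = Counter()
    for items in transactions:
        counts.update(set(items))  # count each item once per transaction
    n = len(transactions)
    return {
        t: sum(1 for c in counts.values() if c / n >= t)
        for t in thresholds
    }


# Made-up transactions: "a" is very common, "c" and "d" are rare.
transactions = [["a", "b"], ["a"], ["a", "c", "d"], ["b", "a"]]
survivors = items_at_thresholds(transactions, [0.25, 0.5, 1.0])
print(survivors)
```

On real data the same sweep over a grid of thresholds gives the curve shown in the plot, and the point where the count starts to explode marks the practical lower bound for minimum support.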

4. User Purchase Count Distribution

- Purpose: Understand user purchasing behavior

- Insights:

- Most users make few purchases (1-2)

- Few users are highly active (power users)

- Affects transaction creation strategy

5. ASIN vs Parent ASIN Comparison

- Purpose: Compare product granularity strategies

- Insights:

- Parent ASIN grouping reduces item count

- Parent ASIN creates more meaningful product associations

- Helps decide between individual products vs. product groups

6. Top Items by Frequency

- Purpose: Identify most popular products

- Insights:

- Reveals best-selling or frequently reviewed products

- Helps understand domain-specific patterns

- Useful for result interpretation

Insights

Key Patterns and Trends

1. Power Law Distribution

The dataset exhibits strong power law characteristics:

- Item Frequency: Most items appear in very few transactions, while a small number of items appear in many transactions

- User Activity: Most users make few purchases, while a small number of users are highly active

- Implication: Low minimum support thresholds are necessary to capture meaningful patterns

2. Transaction Sparsity

- Observation: Average transaction size is small (2-3 items)

- Implication:

- High-dimensional sparse data

- Traditional Apriori may generate many candidates

- FP-Growth may be more efficient due to tree compression
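Sparsity can be quantified as the density of the transaction-by-item matrix: the number of filled cells divided by the number of transactions times the number of unique items, which equals the average transaction size divided by the number of unique items. The sketch below computes it on made-up data; `matrix_density` is a hypothetical helper.

```python
def matrix_density(transactions):
    """Fraction of non-zero cells in the transaction-by-item matrix."""
    unique_items = set()
    filled = 0
    for items in transactions:
        distinct = set(items)
        unique_items |= distinct
        filled += len(distinct)
    cells = len(transactions) * len(unique_items)
    return filled / cells if cells else 0.0


# Made-up transactions: 7 filled cells over 4 transactions x 6 items.
transactions = [["a", "b"], ["c", "d"], ["a", "e"], ["f"]]
density = matrix_density(transactions)
print(f"{density:.4f}")
```

As an illustrative calculation only, an average size of 2.5 items over 10,000 unique items would give a density of 2.5 / 10,000 = 0.025%.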

3. Product Grouping Benefits

Section titled “3. Product Grouping Benefits”- Observation: Using

parent_asininstead ofasin:- Reduces unique item count significantly

- Creates more meaningful associations (product variants grouped together)

- Increases average transaction size

- Implication: Better for association rule mining as it captures product family relationships
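The effect of grouping can be seen on a small made-up example in which `A1-red` and `A1-blue` are hypothetical variants of one parent product `P1`. The helper names below are invented for this sketch.

```python
from collections import defaultdict

# Made-up (user_id, asin, parent_asin) triples.
reviews = [
    ("U1", "A1-red", "P1"),
    ("U1", "A2", "P2"),
    ("U2", "A1-blue", "P1"),
    ("U2", "A2", "P2"),
    ("U3", "A1-red", "P1"),
]


def build_carts(reviews, use_parent_asin):
    """Group items by user at either variant or product-family level."""
    carts = defaultdict(set)
    for user_id, asin, parent_asin in reviews:
        item = (parent_asin or asin) if use_parent_asin else asin
        carts[user_id].add(item)
    return dict(carts)


def unique_item_count(carts):
    return len(set().union(*carts.values()))


def carts_containing(carts, itemset):
    """Number of carts that contain every item in itemset."""
    return sum(1 for items in carts.values() if set(itemset) <= items)


by_asin = build_carts(reviews, use_parent_asin=False)
by_parent = build_carts(reviews, use_parent_asin=True)

print(unique_item_count(by_asin), unique_item_count(by_parent))
print(carts_containing(by_asin, {"A1-red", "A2"}),
      carts_containing(by_parent, {"P1", "P2"}))
```

At the variant level the association with `A2` is split across two colour variants; at the parent level both carts support the same pair, so the pattern is easier to detect at a given support threshold.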

4. Support Threshold Sensitivity

- Observation: Small changes in support threshold dramatically affect number of frequent itemsets

- Example: Reducing support from 1% to 0.5% may double the number of frequent itemsets

- Implication: Careful threshold selection is critical for algorithm performance
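One way to reason about a relative threshold is to convert it into the absolute number of transactions an itemset must appear in. The sketch below does this with exact arithmetic; the transaction count of 100,000 is an illustrative figure and not a statistic from the dataset, and `min_support_count` is a hypothetical helper.

```python
import math
from fractions import Fraction


def min_support_count(support, num_transactions):
    """Smallest transaction count that satisfies a relative support.

    `support` is passed as a decimal string so the conversion to a
    Fraction is exact and the ceiling is not affected by float rounding.
    """
    return math.ceil(Fraction(support) * num_transactions)


num_transactions = 100_000  # illustrative only
required = {s: min_support_count(s, num_transactions)
            for s in ("0.01", "0.005", "0.0005")}
print(required)
```

Halving the threshold halves the required count, and in a long-tailed distribution many more items and itemsets sit just below any given cutoff than above it.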

Relationships Between Variables

User-Product Relationships

- Pattern: Users typically purchase products within similar categories

- Relevance: Suggests category-based analysis may reveal stronger patterns

- Application: Can be used for recommendation systems

Product Co-occurrence Patterns

- Pattern: Certain products frequently appear together in transactions

- Relevance: Core of association rule mining

- Application: Market basket analysis, product recommendations
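Co-occurrence can be inspected directly by counting item pairs, which is a brute-force stand-in for what Apriori or FP-Growth compute more efficiently for 2-itemsets. The helper `pair_counts` and the toy transactions are made up for this sketch.

```python
from collections import Counter
from itertools import combinations


def pair_counts(transactions):
    """Count how many transactions contain each unordered item pair."""
    counts = Counter()
    for items in transactions:
        # Sort so each pair has one canonical ordering.
        for pair in combinations(sorted(set(items)), 2):
            counts[pair] += 1
    return counts


# Made-up transactions of product IDs.
transactions = [
    ["P1", "P3"],
    ["P1", "P3", "P2"],
    ["P3", "P2"],
    ["P1"],
]
counts = pair_counts(transactions)
for pair, count in sorted(counts.items()):
    print(pair, count)
```

Exhaustive pair counting grows quadratically with transaction size, so it is only practical here because most transactions are small.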

Temporal Patterns

- Pattern: Purchase patterns may vary over time (not explored in detail)

- Relevance: Could inform time-sensitive association rules

- Application: Seasonal product recommendations

Preprocessing Decision Support

EDA directly informs preprocessing decisions:

- Minimum Transaction Size:
  - Decision: Use `min_transaction_size=2` (transactions must have at least 2 items)
  - Rationale: Single-item transactions don’t contribute to association rules
  - Impact: Reduces transaction count but improves quality

- Product Grouping Strategy:
  - Decision: Use `parent_asin` when available, fallback to `asin`
  - Rationale: Captures product family relationships, reduces sparsity
  - Impact: More meaningful association rules, better algorithm performance

- Minimum Support Threshold:
  - Decision: Use very low thresholds (0.05% - 0.5%) for comprehensive analysis
  - Rationale: Power law distribution means most items are infrequent
  - Impact: Balances completeness vs. computational cost

- Infrequent Item Filtering:
  - Decision: Filter items appearing in fewer than 3 transactions
  - Rationale: Removes noise, reduces computational overhead
  - Impact: Faster algorithm execution, cleaner results
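The item filter and the size filter can be combined as sketched below. The order used here (drop infrequent items first, then drop transactions that have become too small) is an assumption, since the source does not state the order; `preprocess` and its parameter `min_item_count` are hypothetical names.

```python
from collections import Counter


def preprocess(transactions, min_item_count=3, min_transaction_size=2):
    """Drop rare items, then drop transactions that are too small."""
    counts = Counter()
    for items in transactions:
        counts.update(set(items))  # count each item once per transaction

    kept = []
    for items in transactions:
        filtered = sorted({i for i in items if counts[i] >= min_item_count})
        if len(filtered) >= min_transaction_size:
            kept.append(filtered)
    return kept


# Made-up transactions: "c" and "d" each appear only twice.
transactions = [
    ["a", "b"],
    ["a", "b", "c"],
    ["a", "b", "d"],
    ["a"],
    ["c", "d"],
]
cleaned = preprocess(transactions)
print(cleaned)
```

Applying the filters in the opposite order would keep `["c", "d"]` through the size check and then empty it, so the order matters for the final transaction count.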

Implementation

The EDA process is implemented in the `exploration.ipynb` notebook and uses the following tools:

Python Libraries

- Polars: Efficient DataFrame operations for large datasets

- NumPy: Statistical calculations

- Matplotlib/Seaborn: Visualization

- Collections: Frequency counting and data structures

Key Functions

The preprocessing module (`preprocessing.py`) provides utility functions for EDA:

- `filter_verified_purchases()`: Filter dataset to verified purchases only

- `create_user_carts()`: Group products by user to create transactions

- `get_transaction_stats()`: Calculate transaction-level statistics

- `calculate_item_frequencies()`: Count item frequencies across transactions

- `suggest_min_support()`: Programmatically suggest minimum support thresholds

Example Usage

```python
from preprocessing import (
    filter_verified_purchases,
    create_user_carts,
    get_transaction_stats,
    calculate_item_frequencies,
)

# Load dataset
# NOTE: load_jsonl_dataset_polars and url are not defined in this snippet;
# they are assumed to be provided elsewhere in the project.
data = load_jsonl_dataset_polars(url)

# Filter verified purchases
verified_data = filter_verified_purchases(data)

# Create user carts (transactions)
user_carts = create_user_carts(verified_data, use_parent_asin=True)

# Calculate statistics
transactions = [list(cart) for cart in user_carts.values()]
stats = get_transaction_stats(transactions)
item_freq = calculate_item_frequencies(transactions)

# Analyze results
print(f"Total transactions: {stats['num_transactions']}")
print(f"Unique items: {stats['num_unique_items']}")
print(f"Average transaction size: {stats['avg_transaction_size']:.2f}")
```

Conclusion

Exploratory Data Analysis provides critical insights that guide the entire frequent itemset mining process. By understanding data distributions, relationships, and patterns, we can:

- Make informed preprocessing decisions

- Select appropriate algorithm parameters

- Interpret results meaningfully

- Optimize algorithm performance

The EDA process reveals that the Amazon Reviews 2023 dataset is characterized by high sparsity, power law distributions, and small transaction sizes, all of which influence algorithm selection and parameter tuning for frequent itemset mining.